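In recent years Apple has been pushing AR applications hard. I happen to be interested in AR rendering, face detection, and related techniques, so here I use this ARKitDemo project to explore ARKit's face tracking capabilities.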

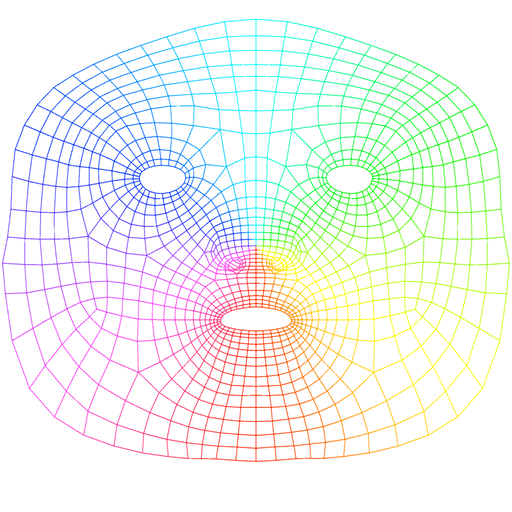

Apple's Tracking and Visualizing Faces sample project ships with a standard 2D Facemesh image at 2048×2048. Ordinary face-texture applications can build specific effects on top of this Facemesh.

I later found that ARKit actually exposes the raw Facemesh vertex positions, so I wanted to explore how these vertices are distributed over the face.

Drawing the ARKit face points

First, find the definition of the ARFaceGeometry class:

```objc
/**
 An object representing the geometry of a face.
 @discussion The face geometry will have a constant number of triangles
 and vertices, updating only the vertex positions from frame to frame.
 */
API_AVAILABLE(ios(11.0))
@interface ARFaceGeometry : NSObject<NSSecureCoding, NSCopying>

/**
 The number of mesh vertices of the geometry.
 */
@property (nonatomic, readonly) NSUInteger vertexCount NS_REFINED_FOR_SWIFT;

/**
 The mesh vertices of the geometry.
 */
@property (nonatomic, readonly) const simd_float3 *vertices NS_REFINED_FOR_SWIFT;

/**
 The number of texture coordinates of the face geometry.
 */
@property (nonatomic, readonly) NSUInteger textureCoordinateCount NS_REFINED_FOR_SWIFT;

/**
 The texture coordinates of the geometry.
 */
@property (nonatomic, readonly) const simd_float2 *textureCoordinates NS_REFINED_FOR_SWIFT;

/**
 The number of triangles of the face geometry.
 */
@property (nonatomic, readonly) NSUInteger triangleCount;

/**
 The triangle indices of the geometry.
 */
@property (nonatomic, readonly) const int16_t *triangleIndices NS_REFINED_FOR_SWIFT;

/**
 Creates and returns a face geometry by applying a set of given blend shape coefficients.
 @discussion An empty dictionary can be provided to create a neutral face geometry.
 @param blendShapes A dictionary of blend shape coefficients.
 @return Face geometry after applying the blend shapes.
 */
- (nullable instancetype)initWithBlendShapes:(NSDictionary<ARBlendShapeLocation, NSNumber*> *)blendShapes;

/** Unavailable */
- (instancetype)init NS_UNAVAILABLE;
+ (instancetype)new NS_UNAVAILABLE;

@end
```

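This class represents the geometry of a face tracked by ARKit. The face geometry has a constant number of triangles and vertices, and only the vertex positions are updated from frame to frame. Both the properties and the header comments indicate that ARKit returns a fixed-size vertex array through this class, and that when the face moves only the values stored at each index change.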

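This means that, as with other face-tracking frameworks, we can label ARKit's face points and map each one to its index. Let's look at what information this class provides: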

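- The vertex array of the face triangles, `simd_float3 *vertices`, contains 1220 vertex coordinates, so ARKit tracks 1220 face points.
- Through `blendShapes`, Apple defines a set of facial movements built from groups of these face points, such as blinking or raising the eyebrows.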
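As a rough sketch of how these properties can be read from Swift: the refined Swift API exposes `vertices` and `triangleIndices` as arrays on `ARFaceAnchor.geometry`. The `FaceTopologyChecker` and `FaceMeshInspector` types below are hypothetical names introduced only for illustration. The checker remembers the first frame's vertex count and triangle indices and reports whether later frames still match, which is one way to confirm the fixed-topology behavior on a device.

```swift
/// Hypothetical helper (not part of ARKit): remembers the mesh topology of the
/// first frame and reports whether later frames still match it.
struct FaceTopologyChecker {
    private var firstVertexCount: Int?
    private var firstTriangleIndices: [Int16] = []

    /// Returns true when the given topology equals the first recorded one.
    mutating func matchesFirstFrame(vertexCount: Int, triangleIndices: [Int16]) -> Bool {
        guard let recordedCount = firstVertexCount else {
            // First call: record the topology as the reference.
            firstVertexCount = vertexCount
            firstTriangleIndices = triangleIndices
            return true
        }
        return recordedCount == vertexCount && firstTriangleIndices == triangleIndices
    }
}

#if canImport(ARKit) && os(iOS)
import ARKit

/// Hypothetical session delegate that logs the face mesh sizes on every update.
final class FaceMeshInspector: NSObject, ARSessionDelegate {
    private var checker = FaceTopologyChecker()

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let faceAnchor as ARFaceAnchor in anchors {
            let geometry = faceAnchor.geometry
            let unchanged = checker.matchesFirstFrame(
                vertexCount: geometry.vertices.count,
                triangleIndices: geometry.triangleIndices)
            print("vertices: \(geometry.vertices.count),",
                  "triangles: \(geometry.triangleCount),",
                  "topology unchanged: \(unchanged)")
            // Blend shape coefficients are NSNumber values between 0 and 1.
            if let blink = faceAnchor.blendShapes[.eyeBlinkLeft] {
                print("eyeBlinkLeft: \(blink.floatValue)")
            }
        }
    }
}
#endif
```

To try it, an instance of the inspector would be assigned as the `delegate` of an `ARSession` running an `ARFaceTrackingConfiguration`; that setup is omitted here.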

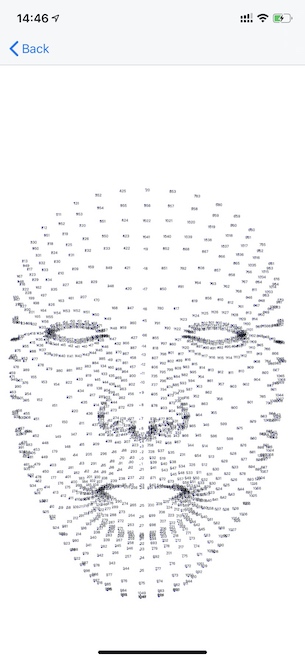

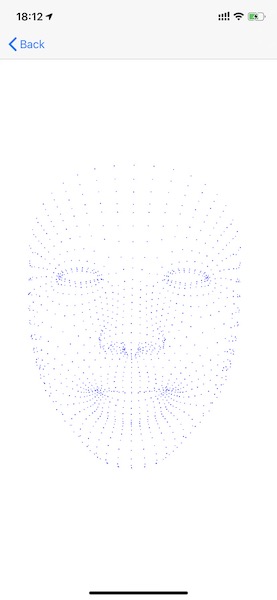

Next, we render these face points with ARKit and Metal. The result is shown in the figure below:

Exploring the positions of the ARKit face points

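Next, we want to work out how the vertices in `vertices` relate to their index positions. If each index always corresponds to the same location on the face, we can label the ARKit face points once and then build finer-grained effects on top of them (without relying on Apple's packaged effects), which has considerable practical value in related products.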

So how exactly do we find the positional layout on this Facemesh?

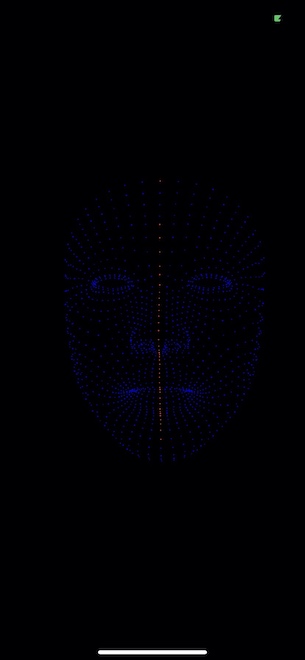

Approach 1: use the vertex index provided by Metal's built-in `[[vertex_id]]` attribute, and mark the vertices with different colors to find out how they are distributed.

```metal
#include <metal_stdlib>
using namespace metal;

// FaceMeshUniforms and the kBufferIndex* constants come from the project's
// shared shader header, which is not shown here.

struct FaceInputVertexIO {
    float4 position [[position]];
    float point_size [[point_size]];
    // Integer values cannot be interpolated, so pass the index through flat.
    ushort vertexIndex [[flat]];
};

vertex FaceInputVertexIO facePointVertex(const device vector_float3 *facePoint [[buffer(kBufferIndexGenerics)]],
                                         constant FaceMeshUniforms &faceMeshUniforms [[buffer(kBufferIndexFaceMeshUniforms)]],
                                         ushort vid [[vertex_id]]) {
    float4x4 modelViewProjectMatrix = faceMeshUniforms.projectionMatrix * faceMeshUniforms.viewMatrix * faceMeshUniforms.modelMatrix;

    FaceInputVertexIO outputVertices;
    outputVertices.position = modelViewProjectMatrix * float4(facePoint[vid], 1.0);
    outputVertices.point_size = 2.5;
    outputVertices.vertexIndex = vid;
    return outputVertices;
}

fragment float4 facePointFragment(FaceInputVertexIO fragmentInput [[stage_in]]) {
    // Vertices with an index below 39 are drawn in red, all others in blue.
    if (fragmentInput.vertexIndex < 39) {
        return float4(1.0, 0.0, 0.0, 1.0);
    }
    return float4(0.0, 0.0, 1.0, 1.0);
}
```

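The figure below shows the vertices with indices 0 through 38 marked in red (the shader tests `vertexIndex < 39`). However, this approach still requires labeling the index of every face point by hand, which is really inefficient.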
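For context, the shader in Approach 1 needs the vertex array in a Metal buffer and a draw call that uses point primitives. The following is a minimal sketch of that CPU side, assuming a render pipeline has already been built from `facePointVertex` and `facePointFragment` and set on the encoder. The function names and the `bufferIndex` parameter are hypothetical, since the value of `kBufferIndexGenerics` is not shown in this post, and the uniforms buffer is left out. Note that 1220 vertices at a 16-byte stride come to roughly 19 KB, more than the 4 KB that `setVertexBytes` is intended for, so the sketch uploads through an `MTLBuffer`.

```swift
/// Byte length needed to hold `vertexCount` face vertices.
/// SIMD3<Float> is padded to 16 bytes, matching `vector_float3` on the Metal side.
func faceVertexBufferLength(vertexCount: Int) -> Int {
    return MemoryLayout<SIMD3<Float>>.stride * vertexCount
}

#if canImport(Metal)
import Metal

/// Hypothetical helper: uploads the face vertices and draws them as points.
/// `bufferIndex` must match the buffer index the vertex shader reads from.
func encodeFacePoints(_ vertices: [SIMD3<Float>],
                      device: MTLDevice,
                      encoder: MTLRenderCommandEncoder,
                      bufferIndex: Int) {
    guard !vertices.isEmpty,
          let buffer = device.makeBuffer(
              bytes: vertices,
              length: faceVertexBufferLength(vertexCount: vertices.count),
              options: .storageModeShared)
    else { return }

    encoder.setVertexBuffer(buffer, offset: 0, index: bufferIndex)
    // One point per vertex, so [[vertex_id]] equals the ARKit vertex index.
    encoder.drawPrimitives(type: .point, vertexStart: 0, vertexCount: vertices.count)
}
#endif
```

Allocating a new buffer on every frame keeps the sketch short; a real renderer would more likely reuse one buffer, because the vertex count stays constant.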

Approach 2: place a text label showing the index at the position of each face point.

```swift
// Remove the labels created for the previous frame.
for label in indexLabels {
    label.removeFromSuperview()
}
indexLabels.removeAll()

// The model-view-projection matrix is the same for every vertex, so build it once.
let modelViewProjection = uniforms.pointee.projectionMatrix
    * uniforms.pointee.viewMatrix
    * uniforms.pointee.modelMatrix

for (index, vertex) in faceAnchor.geometry.vertices.enumerated() {
    // Project the vertex into clip space.
    let facePoint = modelViewProjection * vector_float4(vertex, 1.0)

    let label = UILabel(frame: .zero)
    label.text = String(index)
    label.font = UIFont(name: "PingFangSC-Regular", size: 5)
    label.sizeToFit()
    // Perspective divide, then map [-1, 1] to view coordinates (y is flipped).
    label.center = CGPoint(x: CGFloat(facePoint.x / facePoint.w + 1.0) / 2.0 * facePointRenderView.frame.size.width,
                           y: CGFloat(-facePoint.y / facePoint.w + 1.0) / 2.0 * facePointRenderView.frame.size.height)
    view.addSubview(label)
    indexLabels.append(label)
}
```
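The label placement in Approach 2 is a perspective divide followed by a viewport mapping: x = (x_clip / w + 1) / 2 × width and y = (1 − y_clip / w) / 2 × height. As a small sketch, the same mapping can be written as a standalone function. The name `viewPoint(fromClip:viewSize:)` is hypothetical, and the `w > 0` check is an addition that the original loop does not perform; it skips points that are not in front of the camera.

```swift
import Foundation

/// Maps a clip-space position to view coordinates (origin top-left, y pointing down).
/// Returns nil when w <= 0, i.e. the point is not in front of the camera.
func viewPoint(fromClip clip: SIMD4<Float>, viewSize: CGSize) -> CGPoint? {
    guard clip.w > 0 else { return nil }
    let ndcX = CGFloat(clip.x / clip.w)  // -1 (left) ... 1 (right)
    let ndcY = CGFloat(clip.y / clip.w)  // -1 (bottom) ... 1 (top)
    return CGPoint(x: (ndcX + 1) / 2 * viewSize.width,
                   y: (1 - ndcY) / 2 * viewSize.height)
}
```

With a 100×200 view, the clip-space origin `(0, 0, 0, 1)` lands at the view center `(50, 100)`, and the top-right corner of the clip volume lands at `(100, 0)`.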